Data import guide

This document explains how the import tool provided in the XTreeM platform works. This platform is used by Sage Distribution and Manufacturing Operations. As the import tool supports a given data structure, the examples shown in this document use Sage Intacct entities.

A zip file containing examples of every import mentioned in the chapters is available with this document. If you use the standard templates provided in an empty tenant, you can import every supplied CSV file in sequence.

A second appendix describes the changes made in the latest releases.

Integrating data in Sage Distribution and Manufacturing Operations requires an understanding of a few preliminary concepts about data organization. The next chapter covers these concepts.

Basic principles

In every ERP system, information is highly interconnected. Moving to a new ERP system requires finalizing the data to ensure a smooth transition. This includes:

- Core data (for example customers, suppliers, items, BOMs)

- Backlog

- Historical data (for example the pending orders, stock details, closed transaction history)

Manually entering this data is rare. Usually, the data is extracted from the old system and imported into Sage Distribution and Manufacturing Operations, with a focus on ensuring consistency.

You can create or update records for entities in two ways:

- Using APIs to run mutations on these entities. This is less convenient because you need to write code, but it can be an efficient way to manage regular updates through a predefined interface.

- Working with a tool to integrate a large number of lines, for example, lines extracted from a previous system. In this case, CSV files are the most common way to import data. Sage Distribution and Manufacturing Operations supports this CSV format for any node that has a creation or update operation.

The import tool simplifies data entry by using a standard CSV format and enforcing the same validation rules as the interactive entry process. Any document that does not pass these checks will be rejected.

Import functions

Two pages are available to manage imports:

- Import and export templates

- Import data

Template definitions

A template describes a CSV format and lists the properties of a main entity, called a node. You can generate a CSV file from the template. This CSV file includes a mandatory line listing the codes of the properties to import, with specific syntax to define groups and sub-groups. The file also includes two optional lines defining the data type and a description of each property.

When you create a template from scratch by entering a node name, it includes all the properties from the data dictionary. You can then update it by removing non-mandatory information. The presetting data includes default simplified templates to speed up customer onboarding.

Import function

The import function uploads a CSV file containing data to import.

It has the same header as the CSV file generated from a template, but it can contain a subset of the properties as long as the mandatory ones are present. The following lines contain the data values to import. A sanity check is performed on the file at upload time.

When the upload is done, you can execute the import. This creates or updates records, depending on the option chosen. If both options are selected, the import checks whether each record exists: an existing record is updated; otherwise, a new record is created. Errors occur if existing records are found in creation-only mode, or if records that don't exist are found in update-only mode.
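The rules for choosing between creation and update can be summarized as a small decision function. This is a minimal sketch for illustration only: choose_action and Action are hypothetical names, not part of the platform, whose import engine applies these checks itself.

```python
from enum import Enum


class Action(Enum):
    CREATE = "create"
    UPDATE = "update"


def choose_action(record_exists: bool, allow_create: bool, allow_update: bool) -> Action:
    """Return what the import does with one record, or raise ValueError on a mode conflict."""
    if not (allow_create or allow_update):
        raise ValueError("select creation, update, or both")
    if record_exists:
        if allow_update:
            return Action.UPDATE
        # Creation-only mode: an existing record is an error.
        raise ValueError("record already exists")
    if allow_create:
        return Action.CREATE
    # Update-only mode: a missing record is an error.
    raise ValueError("record does not exist")
```

With both options selected, the existence check alone decides the outcome: an existing record gives Action.UPDATE and a new one gives Action.CREATE.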

Export of data

An import template can also be used as an export template. All the information given in this document about the import format is also valid when you export data. You can export data from the main list available for every entity: when you select a record in the list, an icon appears to export data using one of the appropriate templates. Exporting and reimporting data is a common practice during implementation projects.

For example, by using the same templates for export and import, you can:

- Work in a dedicated implementation environment

- Finalize the data

- Make manual adjustments

- Export the dataset to import it into the production environment

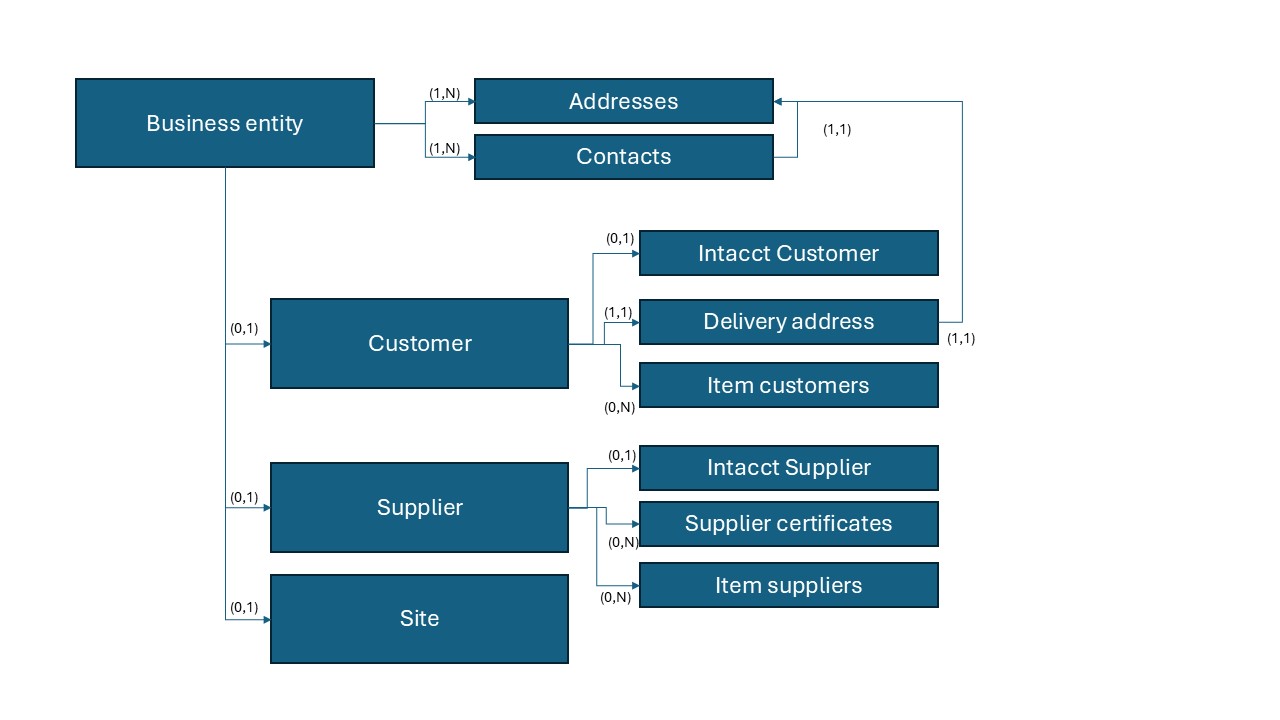

Data organization in Sage Distribution and Manufacturing Operations

Sage Distribution and Manufacturing Operations is based on GraphQL entities called nodes.

Node definition

A node is an entity that manages records, sets of data, and properties. The following operations can be associated with a node:

- Read operations:

  - read, to read a record

  - query, to read several records

  - lookup, to run a query on a subset of properties for selection purposes in the user interface

- Operations performing updates, called mutations in GraphQL terminology:

  - Create, Update, and Delete are the standard mutations.

  - A node can also have specific mutations, such as revert for an accounting entry, post for a sales invoice, and block for a customer.

A node includes properties that can be of any type:

- Classic types such as strings, numeric values, Boolean, enumerations, dates, pictures, rich texts, JSON structures, and so on.

- References to other nodes. For example, a customer can have a delivery mode reference which is another node.

- Collections of references to other nodes. A customer can have several addresses, every address being a node. References can be vital or non-vital:

  - A vital reference cannot exist without the origin node. This is the case for order lines attached to a sales order.

  - A non-vital reference can continue to exist if the main node has been deleted. For example, if a customer record references several sales representatives managing the account, deleting the customer doesn't remove the sales representatives.

Every node record has technical properties, such as creation and update date-time and user, but also a unique identifier (long integer) called _id that is assigned automatically at its creation and does not change during the life of the record.

Keys, references, and setup data

Most entities have at least one unique key called the natural key. This key is used to identify:

- A record in a data entry flow such as an import.

- References to other records in the referenced nodes.

The natural key can have more than one component. You can choose the names of your natural key's components. For setup data with a single-component key, that component is usually called id.

Examples of natural keys are an item ID, a customer code, a normalized country code, a document number, and so on. A natural key is unique and can be used for searching, notably in the user interface. However, the natural key is not used to manage referential integrity in the database structure: referential integrity is based on a unique key value called _id. This means that renaming the natural key value of a record has no impact on the linked records.

When importing data, the natural key is also used to identify references, because it is the code users know. For example, when importing a sales order, the customer, the item, and the payment term are all identified by their external code, which is the natural key. The import transforms these natural keys into the corresponding _id values when updating data.
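The following sketch illustrates this translation step, assuming an in-memory index from natural key to _id for each referenced node. The function, the index layout, the shipTo column, and the _id values are hypothetical; in the platform, the lookup happens server-side during the import. Composite keys are split on the pipe character, as described in the References section of this document.

```python
from typing import Dict, Tuple

NaturalKey = Tuple[str, ...]


def resolve_references(row: Dict[str, str],
                       reference_columns: Dict[str, str],
                       indexes: Dict[str, Dict[NaturalKey, int]]) -> Dict[str, object]:
    """Return a copy of row where each reference column holds the _id of the referenced record.

    reference_columns maps a column name to the node it references; indexes holds,
    per node, a hypothetical natural key -> _id mapping. Composite natural keys are
    written with a pipe (|) between their components.
    """
    resolved: Dict[str, object] = dict(row)
    for column, node in reference_columns.items():
        value = row.get(column, "")
        if not value:
            continue  # nothing to resolve; defaulting and mandatory checks happen elsewhere
        key = tuple(value.split("|"))
        try:
            resolved[column] = indexes[node][key]
        except KeyError:
            raise LookupError(f"no {node} record with natural key {value!r}") from None
    return resolved
```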

Presetting records

Nodes that store presetting data are usually populated when an empty tenant is created. This allows software editors to deliver presetting data that simplifies customer onboarding. This is the case for units of measure, payment terms, countries, and so on.

When the software is updated, presetting records can be completed and updated to comply with changes in regulations. You can also add your own records to these entities. The system distinguishes the supplied presetting records from the additional records through a vendor code, exposed as the _vendor property of the setup entity in the GraphQL schema.

When _vendor has the value sage, the record is a standard one. You cannot freely modify such a record: the id and most properties cannot be changed. There can be some exceptions depending on the entity; for example, you can sometimes deactivate standard setup records and change their description. The values you set in the editable properties of a standard setup record remain unchanged, but a tenant upgrade can automatically update its other properties.

Otherwise, the record was added afterward. In this case, you can modify its key values and other data, although application restrictions can apply to the changes.

During the import procedure, the reference to such a setup entity is performed with the natural key, which includes a property usually called id. Sometimes, there is more than one component.

In some cases, when an array of references exists, you can have a _sortValue present in the key.

This is, for example, the case for the addresses associated with a business entity. The _sortValue is a numeric value that starts at 10 and is incremented in steps of 10. This means that if you want to reference the third address of the JOHNDOE customer in a document, the values you need to use to make the reference are JOHNDOE and 30.
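Assuming the _sortValue values were assigned in order, with no later insertions or deletions, the composite reference can be computed from the position of the child record, as in this sketch. The helper names are hypothetical, and the pipe separator is the one used for composite keys in this document; when in doubt, read the actual _sortValue from an export rather than computing it.

```python
def sort_value(position: int, step: int = 10) -> int:
    """_sortValue of the n-th child (1-based), assuming values 10, 20, 30, ... with no gaps."""
    if position < 1:
        raise ValueError("position is 1-based")
    return position * step


def child_reference(parent_key: str, position: int) -> str:
    """Composite natural key of a child: parent key and _sortValue separated by a pipe."""
    return f"{parent_key}|{sort_value(position)}"
```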

Vital and non-vital reference

References can be vital or non-vital:

- A vital reference cannot exist without the origin node. This is the case for order lines attached to a sales order.

- A non-vital reference record can exist even if the main node has been deleted. If a customer record references several sales representatives managing the account, deleting the customer does not delete the sales representatives.

Vital children can be unique for a record (an address on a sales order), or multiple (a collection of lines in a sales order).

Complex document and vital children

The import and export engine can, in a single file:

- Import a node and its vital children in cascade

- Export the same data, and data linked to non-vital children

A file exported from a tenant can be reimported using the same import template. If non-vital data is exported, it is ignored during the import.

Let's take some examples for an item record:

- In an item import file, you can have:

  - item records

  - several item-site records per item (vital children)

  - several valuation records per item-site record (vital children of item-site)

  All these records can be imported together.

- You can also export the name, the symbol, or other information related to the item's unit. This is non-vital related data: it is simply ignored during the import, so you cannot create an item and its unit at the same time.

This shows how the data structure will appear when defining an import template:

Data structure when defining an import template

You do not have to keep all this information when you import data. You can create files that don't include all these groups, except for groups that are required, as for a sales order, which needs at least one line record.

Direct import of vital children in creation and modification

In some cases, you can directly import the vital children of an existing record. For example, item-site records can be imported as a vital collection during an item import, but you can also import item-site records for existing items by using an item-site import template.

This import is possible in modification when one of the following conditions is met:

- A natural key exists directly for the vital child. This is the case for item-site, whose natural key is made of the item code and the site code.

- A natural key exists for the parent record, and an additional _sortValue property automatically defines a natural key for the child of the vital collection.

This second case applies to the item-customer-price vital collection, whose natural key is made of the item code, the customer code, and a _sortValue property. Modifying these records directly with an item-customer-price template requires the three components in the file. If you create records with the same template, you can add the _sortValue component to force its value, but it is not mandatory: a default value is computed.

Note that a direct import of vital children is not always possible.

- If you create a template for a child node that doesn't support import, the template is automatically set to Export only.

- When you use a template for an import, the system automatically disables the modification mode if modification is impossible.

- When an import in modification is performed and the _sortValue is needed to identify the record because no other key exists, the system returns an error if the _sortValue column is missing.

GraphQL schema and use for APIs

Using GraphQL is the only way to access the relational database used by Sage Distribution and Manufacturing Operations. A user dealing with APIs or a developer has no direct SQL access to the database. This ensures security. Rights are granted on nodes and operations, securing tenant isolation.

A GraphQL operation can only access the tenant it has been connected to. No limit exists on the accessible data if a node is published.

You can use the GraphiQL tool to navigate across nodes and find them. You can access it directly by adding /explorer/ to the main URL of the tenant. It is the easiest way to navigate the schema and look at the available nodes. You can also find information in the documentation. The main page of this tool looks like this:

GraphQL API sandbox main page

You can enter queries or mutations with the GraphQL syntax in the first column. The results display in the middle panel. You can access the schema directly in the right panel.

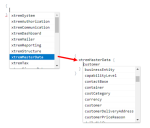

Nodes are associated with a package. Auto-completion features provide a list of packages. When you select one from the list, you can get the list of nodes.

List of nodes

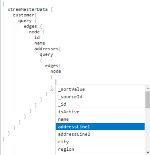

After selecting a node, you can choose the properties you want. If a property is a reference, you can pick the properties you need from the referenced node. Records are listed using a query/edge/node structure.

List of properties

If a property is a collection of references, you can embed a query with an array of records by adding another query/edge/node nested structure. You can do this whether the collection is vital or not.

Nested structure
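To illustrate the nesting, this sketch builds a query text from a nested field specification. It assumes the connection fields are named query, edges, and node; the package and node names come from this document (xtremSales, salesOrder), but the property names in the usage block (number, quantity, and the id of the referenced records) are only illustrative, so check the real ones in GraphiQL before use.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Union


@dataclass
class Collection:
    """Marks a collection property, read through a nested query/edges/node structure."""
    fields: Dict[str, "Spec"] = field(default_factory=dict)


# None = scalar property, dict = single reference, Collection = collection of references.
Spec = Optional[Union[Dict[str, "Spec"], Collection]]


def selection(fields: Dict[str, Spec]) -> str:
    """Render the body of a GraphQL selection set from a nested field specification."""
    parts = []
    for name, sub in fields.items():
        if sub is None:
            parts.append(name)
        elif isinstance(sub, Collection):
            inner = selection(sub.fields)
            parts.append(f"{name} {{ query {{ edges {{ node {{ {inner} }} }} }} }}")
        else:
            parts.append(f"{name} {{ {selection(sub)} }}")
    return " ".join(parts)


def build_query(package: str, node: str, fields: Dict[str, Spec]) -> str:
    """Wrap the selection in { package { node { query { edges { node { ... } } } } } }."""
    body = selection(fields)
    return f"{{ {package} {{ {node} {{ query {{ edges {{ node {{ {body} }} }} }} }} }} }}"


if __name__ == "__main__":
    # Illustrative property names only.
    print(build_query("xtremSales", "salesOrder", {
        "number": None,
        "soldToCustomer": {"id": None},
        "lines": Collection({"item": {"id": None}, "quantity": None}),
    }))
```

The reference (soldToCustomer) only opens a nested selection, whereas the collection (lines) repeats the whole query/edges/node structure, which matches the behavior described above.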

Data format definition

CSV Global structure for files to be imported

Character set

The CSV files can be encoded either in ASCII or in UTF-8 without a BOM header. UTF-8 allows you to import national and special characters. The fields are separated by semicolons.
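If you generate import files with a script rather than with Excel, the encoding and separator rules translate directly into writer settings. A minimal sketch with the Python standard library, where write_import_file is a hypothetical helper name:

```python
import csv
from pathlib import Path
from typing import Iterable, Sequence


def write_import_file(path: Path, rows: Iterable[Sequence[object]]) -> None:
    """Write rows as a semicolon-separated file encoded in UTF-8 without a BOM."""
    # "utf-8" writes no byte order mark ("utf-8-sig" would add one);
    # newline="" lets the csv module control the line endings.
    with path.open("w", encoding="utf-8", newline="") as handle:
        csv.writer(handle, delimiter=";").writerows(rows)
```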

Using Excel to create CSV files

When you create CSV files with Excel, the fields are separated with the list separator defined in the Windows regional settings.

In some countries, the default list separator is a comma. In European countries, the comma is reserved for the decimal symbol and the list separator is generally set to a semicolon. Because the import tool expects semicolons in CSV files, make sure your Windows regional settings are set correctly:

- Open your Regional settings.

- Click on Administrative language settings.

- Click on Additional settings.

- Update the List separator with a semicolon.

If you have texts with special characters such as accents, make sure you save the file in the right format.

Structure description

The CSV format has columns of data separated by a semicolon:

- The header line defines what the different columns of the CSV file represent:

  - For a single property, the column contains its name, prefixed by ! if it is the record's main key, and by * if the information is mandatory. The * and ! characters only appear on the first line.

  - A reference identified by its natural key uses the referenceName syntax, where referenceName is the property to import. Use referenceName(_id) when you supply the _id value to retrieve the linked record.

  - Arrays of references are identified by a group of data, as explained later in this document.

- Two additional lines can be present to describe the structure, but they are not mandatory. Lines that must not be considered as data are identified by the word IGNORE after the last column.

- These three lines have the same format as the template file. Every additional line represents a record.
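As an illustration of these header conventions, together with the group markers (#, /) and the #n suffixes described later in this document, here is a sketch that parses one header cell. The Column type and the order in which the markers are accepted are assumptions made for the example, not the import tool's own parser.

```python
import re
from dataclasses import dataclass
from typing import Optional

# One header cell: optional group prefix (# for collections, / for sub-nodes, repeated per
# nesting level), optional ! (key) or * (mandatory) marker, the property name, an optional
# qualifier in parentheses, and an optional #n suffix for duplicate names.
_HEADER_CELL = re.compile(
    r"^(?P<group>#+|/+)?(?P<flag>[!*])?(?P<name>[A-Za-z_][A-Za-z0-9_]*)"
    r"(?:\((?P<qualifier>[^)]*)\))?(?:#(?P<suffix>[0-9]+))?$"
)


@dataclass
class Column:
    name: str
    is_key: bool = False
    is_mandatory: bool = False
    group_kind: Optional[str] = None  # "collection" or "subnode" for group marker columns
    depth: int = 0                    # nesting level given by the number of # or /
    qualifier: Optional[str] = None   # e.g. "_id", "id", or a language code such as "en-US"
    suffix: Optional[int] = None      # e.g. 1 for status#1


def parse_header_cell(cell: str) -> Column:
    match = _HEADER_CELL.match(cell.strip())
    if match is None:
        raise ValueError(f"unrecognized header cell: {cell!r}")
    group = match["group"]
    return Column(
        name=match["name"],
        is_key=match["flag"] == "!",
        is_mandatory=match["flag"] == "*",
        group_kind=None if group is None else ("collection" if group[0] == "#" else "subnode"),
        depth=len(group) if group else 0,
        qualifier=match["qualifier"],
        suffix=int(match["suffix"]) if match["suffix"] else None,
    )
```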

Basic format with single-line records

- The header line lists the properties to import.

- The next two lines are optional and are documentation oriented. The IGNORE value at the end prevents the import tool from considering them as data to import.

- The other lines with no IGNORE value at the end contain data to import.

| !id | *name | description | type | isBought | volumeUnit | volume | image | |

| string | string | localized text | enum(service,good) | boolean | Reference | Decimal | binaryStream | IGNORE |

| id | name | | Type | is bought(false/true) | volume unit | Volume | image | IGNORE |

| A100 | ABC | ABC product | good | TRUE | Liter | 0.2 | | |

| PRES | Prest | Prestation | service | FALSE | Hours | | | |

In this example:

- id is the record key, prefixed by !, and has a string data type.

- name is a mandatory string field, prefixed by *, containing the item’s name.

- description is a non-mandatory string containing the item’s description.

- type is an enumeration, with two choices in its values list: service or good.

- isBought is a true or false value, false being the default value because it is the first mentioned in the list. It defines if the item is bought or not.

- volumeUnit is a reference to the unitOfMeasure node. The natural key of the referenced node, usually called setupId, is used to identify the referenced unitOfMeasure record.

- volume is a decimal value.

- image is a binary stream that can be entered as a base64-coded string. In this example, it is a picture.

- description is also a translatable text. When you import data, the default text language is your connection language.

This document later describes how to import texts in other languages.
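A minimal sketch of how a file like the one above splits into the header, the documentation lines marked with IGNORE, and the data rows. It assumes the IGNORE marker sits in the first column after the last header column and that empty trailing cells of the header line can be dropped; split_import_file is a hypothetical helper.

```python
import csv
import io
from typing import List, Tuple


def split_import_file(text: str) -> Tuple[List[str], List[List[str]], List[List[str]]]:
    """Split semicolon-separated CSV text into (header, IGNORE lines, data rows)."""
    rows = [row for row in csv.reader(io.StringIO(text), delimiter=";") if row]
    if not rows:
        raise ValueError("the header line is mandatory")
    header = rows[0]
    while header and not header[-1].strip():
        header = header[:-1]  # drop empty trailing cells (the header's slot for IGNORE)
    width = len(header)
    ignored: List[List[str]] = []
    data: List[List[str]] = []
    for row in rows[1:]:
        marker = row[width].strip() if len(row) > width else ""
        if marker == "IGNORE":
            ignored.append(row[:width])
        else:
            data.append(row[:width])
    return header, ignored, data
```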

References, vital children, and collections in the header and lines

Frequently, the data to import is structured in header and line records. Sometimes, a single record can have several line sets and even nested line sets.

For example, if an order has order lines, every order line can have sub-lines if there are different delivery dates. This is done by splitting the total quantity of the line.

Another type of line can simultaneously be associated with the same header. For example, a list of comment lines, with each comment line being a single record.

Each set of lines corresponds to a property of the main node that is an array of references to the nodes containing the lines. There are also cases with a unique reference to a sub-structure, for example, a sales order that contains a specific address.

The CSV format allows importing data of lines associated with header information if the lines are vital references to the main node.

You can also import the header and lines separately when the following situations happen together:

- Lines are not mandatory in a document.

- A creation operation exists on the corresponding node.

In the xtremSales package, the salesOrder node includes a lines collection that is a vital reference to the salesOrderLine node.

- As this vital reference cannot be empty because there must be at least one line in the sales order, importing a sales order requires having at least one line imported with it.

- As the salesOrderLine node does not have a creation operation associated with it, the only way to create a sales order is to embed the header information (salesOrder properties) and the lines (salesOrderLine properties) in the CSV file.

In the xtremMasterData package, the item node includes an allergen property that is a vital collection on itemAllergens nodes that store the item's allergens if it is food.

- Allergen is not a mandatory collection but a vital one.

- You can either import item records together with additional records for each associated allergen, or import item records and itemAllergens records separately.

The item node also contains a collection of itemCustomer references. This collection represents the customer-linked information for items.

- As this is not a vital reference collection, you cannot import the information related to the items and their customers' related reference in the same file.

- Because a vital collection can only have one parent, Sage chose to consider itemCustomer as a vital collection for the customer. This means that you can import customer records and their associated item information in a single file.

CSV format for the header and lines

When a vital collection is on a node, the properties of the collection's members are present on the node just after the property that represents the collection.

- The property that represents the collection is prefixed by a #. This indicates that the following properties are coming from a child node.

- If a sub-collection exists in a child collection, the property corresponding to the sub-collection is prefixed by ##. Every additional nesting level is defined with an additional #. You can have two collections at the same level, but nested collections must follow the group they are nested in.

- List the main record's properties before describing any nested collection.

- A sub-node that is not a collection starts with / instead of #. You can also nest such groups. Such sub-nodes are often addresses and contracts on business documents.

You need to place the records on different lines in the correct nesting order.

This file contains only a header line and data to be imported. It corresponds to a sub-set of item information:

- The first group of information contains the item's main data.

- The second group starts with #allergens.

- It defines the item-allergen node, which contains the allergens associated with a food product. This node includes a reference to the item and to the allergen.

- The only necessary property to list here is allergen, as a reference of the allergen entity.

- #allergens is the property that defines the nested collection. No real value is expected in this column, but it must not be empty on the rows that carry allergen lines: a non-empty value tells the system that the row contains a line of the collection. You can use a line counter, but any non-empty value is correct.

- The third group is the classification group for chemical products. It follows the same principles as the previous groups.

- The fourth group is the item-sites group. It references sites and various numeric values.

| !id | *name | description | type | isBought | #allergens | allergen | #classifications | classification | #itemSites | site(id) | prodLeadTime | safetyStock |

| PIEA | APPLPIE | Apple pie | good | TRUE | 1 | EGG | | | | | | |

| PIEA | APPLPIE | Apple pie | good | TRUE | 2 | MIL | | | | | | |

| PIEA | APPLPIE | Apple pie | good | TRUE | 3 | CCW | | | | | | |

| PIEA | APPLPIE | Apple pie | good | TRUE | | | | | 1 | NAT009 | 2 | 100 |

| PIEA | APPLPIE | Apple pie | good | TRUE | | | | | 2 | DEPS01 | 3 | 300 |

| CHO | CHOCO | Chocolate | good | TRUE | | | | | 1 | DEPS01 | 5 | 25 |

| PRES | INST | Installation | service | FALSE | | | | | | | | |

In the table above:

- The first item has header information, 3 lines related to allergens, and 2 lines associated with sites.

- Then there is another product with only one sub-level corresponding to one site.

- And finally, a third product with no sub-details.
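The following sketch rebuilds nested records from such a flat file, to show how the collection markers can be read. It is a simplification under stated assumptions: only one nesting level is handled, the main key is the first main column prefixed by !, and a new main record starts whenever that key value changes. The function name group_rows is hypothetical, and the test data is the item example trimmed of the classification group.

```python
from typing import Dict, List, Tuple


def group_rows(header: List[str], rows: List[List[str]]) -> List[Dict[str, object]]:
    """Rebuild main records and their one-level # collections from flat CSV rows.

    Sketch assumptions: the main key is the first main column prefixed by !,
    a new main record starts when that key changes, and nested ## levels or
    / sub-nodes are not handled.
    """
    main_columns: List[int] = []
    groups: List[Tuple[str, int, List[int]]] = []  # (collection name, marker column, member columns)
    for index, cell in enumerate(header):
        if cell.startswith("#"):
            groups.append((cell.lstrip("#"), index, []))
        elif groups:
            groups[-1][2].append(index)
        else:
            main_columns.append(index)

    def clean(name: str) -> str:
        return name.lstrip("!*")  # drop the key and mandatory markers

    key_column = next(i for i in main_columns if header[i].startswith("!"))
    key_name = clean(header[key_column])

    records: List[Dict[str, object]] = []
    for row in rows:
        row = row + [""] * (len(header) - len(row))  # pad rows with missing trailing cells
        if not records or records[-1][key_name] != row[key_column]:
            record: Dict[str, object] = {clean(header[i]): row[i] for i in main_columns}
            for name, _, _ in groups:
                record[name] = []
            records.append(record)
        for name, marker, members in groups:
            if row[marker].strip():  # a non-empty marker means the row carries a line
                records[-1][name].append({clean(header[i]): row[i] for i in members})
    return records
```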

To import the allergens associated with the Chocolate item separately, you can use the following file related to the item-allergen entity.

| !item | !allergen |

| CHO | MIL |

| CHO | SOY |

There are two records, each with two values: the first is the reference to the item, and the second is the reference to the allergen.

You can also import a simple file like the following one, without any nested group, with the same item template:

| !id | *name | description | type | isBought |

| PIEA | APPLPIE | Apple Pie | good | TRUE |

| CHO | CHOCO | Chocolate | good | TRUE |

| PRES | INST | Installation | service | FALSE |

By default, the standard template includes the useful columns available and every additional group that can be imported at the same time.

You can create import files that omit the non-mandatory columns and the groups you don't want to import, as long as the document doesn't require at least one detail record per header.

If you keep the columns associated with a sub-group, and if there is data in there, the mandatory fields in sub-groups must be filled.

If you look at the sales order template, you will notice that you have many groups (sales order header, sales order lines, addresses). It can go up to 16 groups of data. But, without changing the template, you can import a file that has two groups of data only (header and lines), and only 5 columns. All the other fields will be defaulted when the import is performed.

| *soldToCustomer | *salesSite | *requestedDeliveryDate | #lines | *item |

| CUS-000003 | S01-FR | 2023-02-01 | 1 | APPLE_PIE |

| CUS-000003 | S01-FR | 2023-02-01 | 2 | BANANA_PIE |

| CUS-000003 | S02-FR | 2023-02-01 | 1 | APPLE_PIE |

Duplicate property names

In a header, every property must be unique. But sometimes, properties can have the same name on different nested groups, for example, name, status, or amount.

When this happens, you need to add a suffix to the property, starting with a #, so the column's title is unique.

For different groups and sub-groups with similar status and name properties, the header can look like this:

| id | status | name | #line | status#1 | name#1 | ##subline | name#2 | #address | status#2 | name#3 |

This header has one line group, with a subline nested group, and one address group.

Properties named status exist in these three groups: their property codes are status, status#1, and status#2.

There are four different property codes for names: name, name#1, name#2, and name#3.
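The numbering rule illustrated above can be sketched as a small helper, assuming the suffix simply counts the earlier occurrences of the same property name from left to right, which reproduces this header. disambiguate is a hypothetical name.

```python
from collections import Counter
from typing import List


def disambiguate(header: List[str]) -> List[str]:
    """Suffix repeated property names with #1, #2, ... so that every column title is unique."""
    seen: Counter = Counter()
    result: List[str] = []
    for cell in header:
        if cell.startswith(("#", "/")):
            result.append(cell)  # group marker column (#line, ##subline, /address): left as-is
            continue
        prefix = cell[0] if cell and cell[0] in "!*" else ""
        name = cell[len(prefix):]
        count = seen[name]
        seen[name] += 1
        result.append(cell if count == 0 else f"{prefix}{name}#{count}")
    return result
```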

Properties format

Dates

Dates must be in the YYYY-MM-DD format, where YYYY is the year, MM the month, and DD the day. The separator must be a dash.

Date ranges

You can now define a single field as a date range in itemPrices. The format is the following:

[YYYY-MM-DD, YYYY-MM-DD], where the first date is the start boundary of the range and the second date is the end boundary. Square brackets mean that the boundary dates are included in the range; parentheses mean that they are excluded.

For example, [2024-01-01,2024-01-05] means from the first to the fifth of January included, whereas [2024-01-01,2024-01-05) means from the first to the fourth of January included (the fifth being excluded).

This format can be used for export and import, but on export, the range is always given in the [YYYY-MM-DD, YYYY-MM-DD) format.
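A small sketch that converts this notation into inclusive first and last dates, and renders an inclusive range in the half-open form used on export. The function names are hypothetical; only the bracket semantics come from this document.

```python
from datetime import date, timedelta
from typing import Tuple


def parse_date_range(text: str) -> Tuple[date, date]:
    """Parse "[YYYY-MM-DD,YYYY-MM-DD)" style text into inclusive (first, last) dates.

    A square bracket includes the boundary; a parenthesis excludes it.
    """
    text = text.strip()
    if len(text) < 2 or text[0] not in "[(" or text[-1] not in "])":
        raise ValueError(f"not a date range: {text!r}")
    start_text, end_text = (part.strip() for part in text[1:-1].split(","))
    first = date.fromisoformat(start_text)
    last = date.fromisoformat(end_text)
    if text[0] == "(":
        first += timedelta(days=1)
    if text[-1] == ")":
        last -= timedelta(days=1)
    return first, last


def format_for_export(first: date, last: date) -> str:
    """Render an inclusive range in the half-open [start, end) form used on export."""
    return f"[{first.isoformat()}, {(last + timedelta(days=1)).isoformat()})"
```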

Numeric values

Numeric values must be in decimal format. If needed, the decimal separator must be a point. Thousands separators are not allowed. A minus sign is allowed for negative numbers.

For example, -12345.6 is valid, whereas 12,345.6 is not.
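A minimal validation and formatting sketch for this rule. It assumes that a value without digits before the point (such as .5) should be rejected as well; the import tool's behavior for such edge cases is not documented here, and the helper names are hypothetical.

```python
import re
from decimal import Decimal

# Optional minus sign, ASCII digits, and an optional point followed by digits.
# No thousands separator is accepted.
_NUMBER = re.compile(r"-?[0-9]+(?:\.[0-9]+)?")


def is_valid_number(text: str) -> bool:
    return _NUMBER.fullmatch(text) is not None


def format_number(value: Decimal) -> str:
    """Format a Decimal with a point as decimal separator and no digit grouping."""
    return format(value, "f")
```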

Enumeration

An enumeration is defined by a restricted list of choices. In the user interface, such a field appears as a combo box and is translated into the user’s connection language.

In an import file, you need to use the internal code. The second header line of a standard template gives the list of codes that you can use.

For example, enum(purchasing,production) is used for replenishmentMethod in the item template.

Strings

A string can include any character except carriage returns and double quotes. To include double quotes in a string, enclose the whole string in double quotes and double every quote inside it:

APPLE_PIE;"Apple pie 10"" diameter";Apple Pie;10

This line is imported as four fields: three alphanumeric fields and one numeric field. The second field contains the value Apple pie 10" diameter.
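Python's standard csv module applies the same quoting rule when the delimiter is set to a semicolon, as this sketch shows; to_csv_line is a hypothetical helper.

```python
import csv
import io
from typing import Sequence


def to_csv_line(fields: Sequence[object]) -> str:
    """Render one record as a semicolon-separated line with CSV quoting."""
    buffer = io.StringIO()
    # Default QUOTE_MINIMAL: only fields containing ;, " or a line break are quoted,
    # and every embedded " is doubled.
    csv.writer(buffer, delimiter=";", lineterminator="\n").writerow(fields)
    return buffer.getvalue().rstrip("\n")
```

For example, to_csv_line(["APPLE_PIE", 'Apple pie 10" diameter', "Apple Pie", 10]) produces the line shown above.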

Pictures

You can add a picture in Base64 format. The picture then becomes a string, which can be very long.
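A sketch for producing the Base64 string from a picture file with the Python standard library; the helper name is hypothetical.

```python
import base64
from pathlib import Path


def picture_to_base64(path: Path) -> str:
    """Return the content of a picture file as a single-line Base64 string."""
    # b64encode never inserts line breaks (unlike base64.encodebytes),
    # which matters because a CSV string value must not contain carriage returns.
    return base64.b64encode(path.read_bytes()).decode("ascii")
```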

Boolean

A Boolean can be represented by TRUE and FALSE values, either in uppercase or in lowercase.

Localizable texts

You can store texts such as product descriptions and retrieve them in different languages as an array of texts associated with a list of language codes.

This process is almost transparent for the user. Depending on the language used for the connection, the text displays in the correct language. If the text is unavailable in the language, there is a base text with a default value.

A language code can be a two-character code compliant with ISO 639-1. It can be en, fr, de, es, it, and so on.

When you connect, you usually see a language code associated with a country code, for example, en-US, en-GB, fr-FR, fr-CA, es-ES, or es-AR.

If the language code used for the connection does not correspond to an existing translation of a text, the system looks for an equivalent text associated with the first two characters of the language code. For example, suppose the following translations are stored in the database:

- base: Text 1

- en: Text 2

- fr: Text 3

- en-GB: Text 4

- en-US: Text 5

- fr-FR: Text 6

If you connect with:

- fr-FR, Text 6 is used because it is a perfect match.

- fr-CA, Text 3 is used because the first two characters match.

- pt, Text 1 is used because it is the default base text.
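The lookup order described above can be expressed as follows. This is a sketch of the documented behavior, not the platform's code; translations is a hypothetical mapping from a language code (or base) to a text.

```python
from typing import Dict


def localized_text(translations: Dict[str, str], locale: str) -> str:
    """Pick the text to display for a connection locale.

    Order: exact locale, then the two-letter language code, then the base text.
    """
    if locale in translations:
        return translations[locale]
    language = locale[:2]
    if language in translations:
        return translations[language]
    return translations["base"]
```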

When you create a record through import with just the property name as a header, only the base text is filled. But you can create several columns in your template by adding the language code in parentheses just after the property name.

| description | description(en-US) | description(en-GB) | description(fr-FR) | description(es) |

| Text1 | Text2 | Text3 | Text4 | Text5 |

If a record is created with this import file:

- Text1 is stored as the base value.

- Text2 is stored as a value for en and for en-US.

- Text3 is stored as a value for en-GB.

- Text4 is stored as a value for fr and fr-FR.

- Text5 is stored as a value for es.

When you update an existing record through import, only the exact matches are filled. In this case:

- The base value is updated with Text1.

- The en-US and en-GB translations are created (if they don't exist) or modified with the Text2 and Text3 values.

- The en translation is not filled if it doesn't exist. This means that a user connecting to the user interface with en-AU as the locale sees the base value.

- If the fr translation and the fr-FR translation exist, the fr translation is not changed, whereas the fr-FR translation is updated with Text4.

- If the es-ES translation exists, it is not modified; but the es translation is updated with Text5.
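The creation and update rules can be sketched as two functions. The creation rule is inferred from the example above (a locale column such as en-US also fills its two-letter language, en, when no earlier column has filled it), so treat it as an interpretation rather than the platform's implementation; the function names are hypothetical.

```python
from typing import Dict, List, Tuple

Columns = List[Tuple[str, str]]  # (language code or "base", text) in file column order


def translations_on_create(columns: Columns) -> Dict[str, str]:
    stored: Dict[str, str] = {}
    for language, text in columns:
        stored[language] = text
        if "-" in language:
            # Inferred rule: a locale column also fills its two-letter language,
            # unless an earlier column already did.
            stored.setdefault(language.split("-")[0], text)
    return stored


def translations_on_update(existing: Dict[str, str], columns: Columns) -> Dict[str, str]:
    # Only exact matches are written; no two-letter fallback is created or changed.
    updated = dict(existing)
    for language, text in columns:
        updated[language] = text
    return updated
```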

References

References are managed by the natural key. This key must be unique. It can be a single or a composite key. When several segments exist in a key value, they are separated by the pipe (|) character.

Some setup records have a setupId property that corresponds to the natural key. It can also be an id property.

For tables that are not setup data, the natural key is usually the code of the record. For example, the document number, item code, or customer ID.

References on multiple child nodes with the _sortValue property

When a reference points to a child node associated with a main entity, and the child node has no other unique key, a technical property called _sortValue can be used as the second part of the key to make it unique. For a given main entity key, all the _sortValue values are different.

For example, to refer to one of the addresses of a business entity in a customer import, enter a composite key made of the business entity key followed by the _sortValue value, separated by a pipe:

*isActive;!businessEntity;minimumOrderAmount;*paymentTerm;isOnHold;postingClass;#deliveryAddresses;isActive#1;isPrimary;*shipToAddress;shipmentSite;*deliveryMode;deliveryLeadTime;incoterm

TRUE;CUS-000004;150;DUE_UPON_RECEIPT_ALL;FALSE;CUSTOMER_DOMESTIC;1;TRUE;FALSE;CUS-000004|10;S01-ZA;ROAD;5;CIF

TRUE;CUS-000004;150;DUE_UPON_RECEIPT_ALL;FALSE;CUSTOMER_DOMESTIC;2;TRUE;TRUE;CUS-000004|20;S02-ZA;SEA;5;FOB

TRUE;CUS-000004;150;DUE_UPON_RECEIPT_ALL;FALSE;CUSTOMER_DOMESTIC;3;TRUE;FALSE;CUS-000004|30;S03-ZA;RAIL;3;CIP

The first delivery address refers to the business entity's first address, the second to the second address, and the last to the third address.

This works because the business entity was imported with its addresses as a nested collection of lines.

| !id | isActive | legalEntity | *name | *country | *currency | taxIdNumber | #addresses | isActive#1 | *name#1 | addressLine1 | addressLine2 | city | region | postcode | country |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| string | boolean | enum(corporation,physicalPerson) | string | reference | reference | string | collection | boolean | string | string | string | string | string | string | reference |
| id | is active (true/false) | legal entity | name | country | currency | tax id number | addresses | is active (true/false) | name | address line 1 | address line 2 | city | region | postcode | country |
| CUS-000004 | TRUE | corporation | Customer #000004 | ZA | ZAR | 1234 | 1 | TRUE | CUS-000004-MAIN | 2 Apple Tree Road | | JOHANNESBURG | | | ZA |
| CUS-000004 | TRUE | corporation | Customer #000004 | ZA | ZAR | 1234 | 2 | TRUE | CUS-000004-PLANT1 | 2 Cherry Tree Road | | DURBAN | | | ZA |
| CUS-000004 | TRUE | corporation | Customer #000004 | ZA | ZAR | 1234 | 3 | TRUE | CUS-000004-PLANT2 | 2 Banana Tree Road | | CAPE TOWN | | | ZA |
| CUS-000004 | TRUE | corporation | Customer #000004 | ZA | ZAR | 1234 | 4 | TRUE | CUS-000004-PLANT3 | 2 Pineapple Tree Road | | PRETORIA | | | ZA |

In this case, _sortValue is assigned numeric values starting at 10 and increasing by 10.
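The numbering rule for nested lines can be sketched in Python to build the references used in the delivery address example. This illustration relies only on the rule stated above: start at 10, step 10, in the order of the lines in the file. Addresses imported separately follow another rule, so export the data to check the actual values. The function names are hypothetical.

```python
def nested_sort_values(count, start=10, step=10):
    """_sortValue of lines imported as a nested collection: 10, 20, 30, ..."""
    return [start + step * index for index in range(count)]


def child_references(parent_key, count):
    """Composite keys parent|_sortValue for the children, in file order."""
    return [f"{parent_key}|{value}" for value in nested_sort_values(count)]


print(child_references("CUS-000004", 4))
# ['CUS-000004|10', 'CUS-000004|20', 'CUS-000004|30', 'CUS-000004|40']
```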

You can import addresses separately with a template based on BusinessEntityAddress. If you do so, _sortValue is assigned a starting value based on the business entity's _id internal key multiplied by 100.

There is no easy way to know which value to use when this happens. To assign a given value to _sortValue during a future import, you can add _sortValue to the template, even if this property is not present in the template generated by default. In the grid below, this has been done to import a business entity address with a _sortValue equal to 100.

| *name | addressLine1 | addressLine2 | city | region | postcode | country | locationPhoneNumber | !businessEntity | _sortValue | isPrimary |
|---|---|---|---|---|---|---|---|---|---|---|
| string | string | string | string | string | string | reference | string | reference | number | boolean |
| name | address line 1 | address line 2 | city | region | postcode | country (#id) | location phone number | business entity (#id) | sort value | is primary (true/false) |
| Additional address | Quai des brumes | | NANTES | | 44000 | FR | | CN001 | 100 | FALSE |

Note that a _sortValue can be present without being part of the unique key. In this case, the _sortValue property is only used to sort the data when it is displayed in a grid.

JSON fields

Columns can be defined as JSON data. When this happens, enclose the whole JSON value in double quotes and double every double quote it contains, as CSV requires for values that contain quotes.

This line has 3 fields. The second one is a JSON field.

FirstField;"{""column1"":""value1"",""column2"":""value2""}";ThirdField

The FirstField and ThirdField values are assigned to the entity's first and third fields.

The second field is a JSON property filled with the following JSON structure:

{
  "column1": "value1",
  "column2": "value2"
}
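Any standard CSV library applies the same quoting rule, so you do not need to double the quotes by hand. The following Python sketch uses the standard csv and json modules to read the example line and to write it back. It assumes the semicolon separator shown in the example.

```python
import csv
import io
import json

line = 'FirstField;"{""column1"":""value1"",""column2"":""value2""}";ThirdField\n'

# Reading: the csv module removes the outer quotes and turns each "" back into ".
first, json_text, third = next(csv.reader(io.StringIO(line), delimiter=";"))
payload = json.loads(json_text)
print(payload)  # {'column1': 'value1', 'column2': 'value2'}

# Writing: the csv writer adds the outer quotes and doubles the inner quotes.
out = io.StringIO()
csv.writer(out, delimiter=";", lineterminator="\n").writerow(
    [first, json.dumps(payload, separators=(",", ":")), third])
assert out.getvalue() == line
```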

Mandatory fields

Mandatory fields are prefixed by:

- * in the first line of the template

- ! if it is the record identifier

The mandatory columns must be in the file to be imported if you select the Creation checkbox and launch the import.

If you launch the import in update mode only, the mandatory columns can be absent from the file. In this case, the value does not change for the updated records. The natural key that identifies the record to modify is mandatory.

If you launch the import for creation and update, the mandatory columns must be present, even if every record present in the file already exists in the database. If values are missing in a column and the record already exists, the update is done with a null value. An error occurs if the field is mandatory. If you want to update only some columns and keep the others unchanged, use a format that does not contain them and perform an import in update mode only.

The following grid summarizes how empty values are managed for mandatory properties.

| Import mode for the record | A default value exists | The column is present in the file | A value is present on the line | Result |
|---|---|---|---|---|
| Creation | Yes | No | N/A | Default value is used |
| Creation | Yes | Yes | No | Default value is used |
| Creation | Yes | Yes | Yes | Line value is used and checked |
| Creation | No | No | N/A | Global error |
| Creation | No | Yes | No | Error for the line |
| Creation | No | Yes | Yes | Line value is used and checked |
| Update | Yes | No | N/A | No update for the property |
| Update | Yes | Yes | No | Error for the line |
| Update | Yes | Yes | Yes | Line value is used and checked |
| Update | No | No | N/A | No update for the property |
| Update | No | Yes | No | Error for the line |
| Update | No | Yes | Yes | Line value is used and checked |
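The grid can also be read as a decision function. This Python sketch only restates the grid for one mandatory property of one record. It is not the import engine's code, and the function name and result strings are hypothetical. When you select both Insert and Update, the mode is decided for each record.

```python
def mandatory_property_result(mode, has_default, column_present, value_present):
    """Outcome for one mandatory property of one record (restates the grid)."""
    if mode not in ("creation", "update"):
        raise ValueError(f"unknown mode: {mode}")
    if not column_present:
        if mode == "update":
            return "no update for the property"
        return "default value is used" if has_default else "global error"
    if value_present:
        return "line value is used and checked"
    if mode == "creation" and has_default:
        return "default value is used"
    return "error for the line"


print(mandatory_property_result("update", True, True, False))  # error for the line
```

Note that an empty cell only falls back to the default value in creation mode; in update mode, it is always an error for a mandatory property.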

If a property is not mandatory in update mode, the rules are the following:

- If a column is present in the file, its value replaces the previous one. If the column is present but the cell is empty, the previous value is erased, because an empty value is allowed for this property.

- If a column is not present in the file, the previous value is not modified.

For localizable texts, the situation is slightly different because the file can contain several columns, one per language:

- If a column does not mention the language, its value replaces the base value. Add the language to be sure you update the right text. To replace only the base text, use the fieldname(base) syntax.

- If the text is mandatory, the file contains several columns for different languages, and the record is created:

  - At least one of the columns must be filled. The first column fills the base value as well as the translation for its language code.

  - Languages without a value are ignored: the corresponding translation is not set to an empty string, and the base value is used for these languages.

In modification mode, existing translations are not changed for languages that have no value in the file.

Data import procedure

This section explains how to generate templates and import data in Sage Distribution and Manufacturing Operations.

Generate the template

Open: Administration > Import and export > Import and export templates

The import tool is based on templates created by entering an ID, a name, and the node name.

To create an import or export template, select the node name and enter a name and an ID for your template. The corresponding description is filled in by default, but you can simplify it.

The Template columns grid displays the list of fields and the corresponding groups.

In this grid, you can:

- Use the arrows to move fields up and down.

- Add a field by clicking the Insert button and selecting a property from the group's available fields and collections.

- Delete properties or an entire group.

Adding a field with the + button adds a property after the current one.

A field on the page displays the CSV header of the template, which you can use to enter data. Only the first line of the template is necessary. You can select the Data type and Description checkboxes to add the data type and description lines to the generated CSV file. They are not selected by default. When the Data type checkbox is selected, the data type line lists the allowed values of each enumeration field.

You need a template to import a CSV file. The file that contains the data to import must at least have the first line of its template. The other 2 lines (data types and descriptions) are documentation and are not mandatory. A CSV data file can have fewer columns than the template, provided all the mandatory columns are present. The fields can also be in a different order in the file, as long as the groups stay in the right order: the fields of a parent group must come before its child groups.
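Before you launch an import, you can check a file header against its template. This Python sketch makes two assumptions. First, a column starting with # opens a collection group that runs until the next # column. Second, the mandatory columns of a group are only required when the group's column is in the file, because unused groups can be deleted from a template. It does not check the order of the groups. The function names are hypothetical.

```python
def column_groups(template_header):
    """Map each template column (without its * or ! prefix) to its collection group.

    None means the main entity; a column starting with # opens a new group.
    """
    owners = {}
    group = None
    for column in template_header:
        name = column.lstrip("*!")
        if name.startswith("#"):
            group = name
        owners[name] = group
    return owners


def check_header(template_header, file_header):
    """Return the header errors; an empty list means the header is accepted."""
    owners = column_groups(template_header)
    file_names = [column.lstrip("*!") for column in file_header]
    errors = [f"column not in template: {name}"
              for name in file_names if name not in owners]
    for column in template_header:
        name = column.lstrip("*!")
        group = owners[name]
        group_used = group is None or group in file_names
        if column.startswith(("*", "!")) and group_used and name not in file_names:
            errors.append(f"missing mandatory column: {name}")
    return errors


template = ["!id", "!chartOfAccount", "isActive", "*name", "*isDirectEntryForbidden",
            "isControl", "taxManagement", "#attributeTypes", "*attributeType",
            "*isRequired", "#dimensionTypes", "*dimensionType", "*isRequired#1"]
print(check_header(template, ["!id", "!chartOfAccount", "*name", "*isDirectEntryForbidden"]))  # []
```

The two error kinds mirror the header problems that make an import fail: a missing mandatory column, and a column that is not in the template.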

Select the Generate template button to download a CSV file. You can open the file, enter data, and then import the corresponding file.

If you have data on the entity you want to import, you can select a record with relevant values and export it to get a standard header and a data sample.

Import data

Open: Administration > Import and export > Import data

This page has 2 tabs:

- Data

- Import results

A file can contain thousands of lines that take a long time to process, so the import runs asynchronously.

Data tab

First, upload the file to import with the Browse files button located under the Select file section.

The upload can take a while, so a progress bar displays. Do not leave the page before the progress bar reaches 100%, because this interrupts the operation.

At the end of the upload, you can:

- Select the operations to perform:

  - Insert to create new records

  - Update to update existing records

  If you only select Insert, existing records return errors. If you only select Update, non-existent records return errors. If you select both, the system creates or updates each record depending on whether it already exists.

- Perform a consistency check by selecting Test import. The file is read without creating or updating anything, and all the consistency checks are done, such as the data format and the existence of the references. As a test import does not create records, some errors controlled by the database do not occur at this stage. For example, if an import in creation mode only contains the same record twice, the duplicate is not detected during the test.

- Choose the behavior to adopt when errors occur. The import works record by record, including the children of each record. If a record or one of its children is incorrect, the import of the whole record fails. If you select the Continue and ignore the error checkbox, the import task continues with the following records. The import stops when the number of records in error reaches the value entered in the Maximum number of errors field. An error does not cancel the import of the valid records already imported: there is no rollback.

- Select the template to use.

- Select the Import button to launch the import task. A confirmation box displays, followed by a confirmation message.

Import results tab

On the Import results tab, you can list the files submitted with the current template and see their status:

- Pending means that the submission has been taken into account, but the job has not started yet.

- In progress means that the import is still running.

- Completed means that the file was completely processed. Some records may still have been rejected: the Error detail column indicates the errors found, and you can download the file containing the records in error from the line. Refer to the Error management chapter for more information on errors.

- Failed means that the format of the file was not correct. For example, it can be an error such as a missing mandatory column in the header, a column mentioned that does not exist in the header, or an incorrect format of the header. If this happens, a global error message displays when you select the line.

You can select the Refresh button to refresh the grid while imports are in progress.

Error management

Record-level errors

The import processes each main record together with all its linked child records as one document, and creates each document in a separate transaction. For example, if you import documents with 3 nested sub-levels, an error at any level rejects the whole document, but the next document is still imported if no error is detected in it.

When launching an import, you can decide whether the import stops or continues when errors happen. The Maximum number of errors field indicates the number of lines in error allowed before stopping the import. If you set this number to 1, the import stops at the first error, but all the documents that were correct before the error occurred are imported.

The system copies the documents in error to a separate file that has the same format as the original file, with an additional _error column at the end. This column gives the error message explaining why the document import failed. You can edit the error file directly, fix the data, and re-import it: the system ignores the _error column at import time. This allows you to import data in several steps, for example:

- You can run an import with a higher limit for the number of errors, ideally until you reach the end of the file.

- You can recycle the documents in error by fixing them and reintegrating them until there is no error.
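The following Python sketch models this behavior to make the rules concrete. It is not the platform's code. Here, validate stands for the checks done by the import, and each document is a list of CSV lines with the main line first. Writing the error message on the first line of the document only is an assumption.

```python
import csv
import io


def run_import(header, documents, validate, max_errors):
    """Import documents one by one and build the error file (sketch).

    documents: list of documents; each is a list of CSV lines, main line first.
    validate: hypothetical check returning None, or an error message for the document.
    Returns the imported documents and the text of the error file.
    """
    imported = []
    out = io.StringIO()
    writer = csv.writer(out, delimiter=";", lineterminator="\n")
    writer.writerow(header + ["_error"])
    error_count = 0
    for document in documents:
        message = validate(document)
        if message is None:
            # Each document is committed on its own: later errors never roll it back.
            imported.append(document)
            continue
        error_count += 1
        # The whole document goes to the error file; the message is on its first line.
        for position, line in enumerate(document):
            writer.writerow(line + [message if position == 0 else ""])
        if error_count >= max_errors:
            break  # the following documents are not processed
    return imported, out.getvalue()


def name_is_filled(document):
    return "name: a value is mandatory" if document[0][1] == "" else None


documents = [[["A", "x"]], [["B", ""]], [["C", "y"]], [["D", ""]]]
imported, error_file = run_import(["!id", "*name"], documents, name_is_filled, max_errors=1)
print(error_file)
```

With max_errors=1, document A is imported, B goes to the error file, and C is never processed. Raising the limit lets the run reach the end of the file and collect every document in error at once.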

This example shows what happens when you try to import an allergen record on an item that is a chemical component.

| !id | name | description | status | type | isSold | stockUnit(id) | volume | weightUnit(id) | weight | attribute | isStockManaged | #classifications | classification(id) | #allergens | allergen(id) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| CHLORINE | Chlorine | Chlorine | active | good | TRUE | l | 1 | kg | 0.9 | CHEMICAL | TRUE | | | 1 | EGG |

If you follow this example, the line is rejected during the import. You can go to the Import results tab to download the import's log file.

This file has the same format as the import file, with an additional _error column, which gives the error message that has been found.

In this case, it can look like this:

| !id | name | description | status | type | isSold | stockUnit(id) | volume | weightUnit(id) | weight | attribute | isStockManaged | #classifications | classification(id) | #allergens | allergen(id) | _error |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| CHLORINE | Chlorine | Chlorine | active | good | TRUE | l | 1 | kg | 0.9 | CHEMICAL | TRUE | | | 1 | EGG | attribute: Allergens are only allowed on food items. |

Global errors

When there are global inconsistencies in the header, the error displays in the Results grid's Error detail column.

Select the line to view the error's details.

Error management summarized

- The errors are managed at document level. If an error is detected on a line or a sub-line, the whole document is rejected.

- When importing data, set the number of errors you accept before the import stops. If you set this value to 1, the import stops on the first faulty document; correct documents that precede it in the CSV file are still imported. When testing an import, a good practice is to set a high maximum: you get all the lines in error at once and can correct them before running the import in production.

- Having no error in test mode does not guarantee that the import in production will work. Some errors linked to creation or update cannot be detected in test mode. This is especially the case when a key becomes a duplicate value because a record with the same key was imported earlier in the same file.

- If a user is not allowed to create sales orders for a given site, importing a document on this site creates an error. The user needs the right access rights to run the import without errors.

- The definition of a document depends on the template used.

  - If a template linked to item and site is used, every record is an item and site definition. Only the lines linked to sites the user is not authorized for are rejected. The lines assigned to authorized sites are imported, because each one is a separate document.

  - If a template linked to item is used and includes item-site information, a line linked to a site the user is not authorized for triggers the rejection of the whole item, including its lines assigned to authorized sites.

Creation mode and update mode

You can import data in creation mode, update mode, or both. If you select both, the import engine checks whether the record already exists: if it does, the record is updated; otherwise, it is created.
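The choice between creation and update for one record can be summarized as follows. This Python sketch is illustrative only. The function name and return strings are hypothetical, and it does not model the case where update mode is disabled because the node has no usable natural key.

```python
def import_action(record_exists, insert, update):
    """What happens to one record, given the Insert and Update selections (sketch)."""
    if not (insert or update):
        raise ValueError("select Insert, Update, or both")
    if record_exists:
        return "update" if update else "error: the record already exists"
    return "create" if insert else "error: the record does not exist"


for exists in (False, True):
    print(exists, import_action(exists, insert=True, update=True))
```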

When a vital child collection exists, the natural key can be:

- Directly defined in the child record. For example, the item and site collection, which is a vital child of the item, has a natural key composed of just the item and the site. These two properties are sufficient to uniquely identify a record. When you display the item and site records on the item page, _sortValue is used to order the records, but it is not part of the natural key. For an item and site import in modification mode, _sortValue is not mandatory, although it can be modified through this import.

- Inherited from the parent with a natural key. In this case, _sortValue is part of the natural key of the child record, which is made of the natural key of the parent and the _sortValue. This is the case for item customer and price, a vital child of item that needs a _sortValue to build its natural key. An import in modification mode requires _sortValue in the import file.

If these conditions are not met, the node cannot be imported in modification mode. This is the case for an autonomous node without a natural key, for a node that is not autonomous, and for a vital child without its own natural key whose parent has no natural key either.

When you use a template for an import, the system automatically disables the modification mode if a modification is impossible. If _sortValue is mandatory to identify the record because no other natural key exists, the system returns an error when an import in modification mode is performed without it.

Manage templates

Open: Administration > Import and export > Import and export templates

Creating a template is easy: the template is generated automatically from the entity you select.

When you create a template, the Template columns section of the record is filled in by default.

If custom fields exist on the node, they appear in the template as normal fields with their name. In the CSV file, they appear in the following format: _customData(propertyName) where propertyName is the technical name given to the custom property.

Select the Generate template button to open a dialog box with your template. Select the CSV file's name to download it on your PC. You can then create data inside this file. You can use the template as it is and open it with Microsoft Excel.

You can also delete non-mandatory columns. If you do so, the created records have a default value depending on the business rules associated with the imported entity. In case of modification, they remain unchanged.

Managing templates with options

Some default templates such as stock entries and purchase receipts can include additional groups such as serial number details or lot details. The group related to serial numbers is only present if the corresponding serialNumberOption option is active on the Service options page.

As this option is not active by default, the provided default template does not include the data groups related to non-active options. As these templates are provided as factory templates, you cannot modify them. You need to create a new template for the node you want to import and use this template. By default, it contains all the possible columns, so a generated template probably has more columns than you need. You can then open your template in Excel and delete the columns and groups you don't need. This reduces the number of columns to fill, and the mandatory columns remain identified by their prefix.

The template can contain columns that are not present in the file. However, you cannot have columns in the file that are not present in the template. This creates errors.

Import main entities

You can import any entity that supports CRUD actions and has a natural key. So, you can import most of the entities present in Sage Distribution and Manufacturing Operations. This section highlights data imported frequently during on-boarding projects.

Accounts

Accounts are used to generate the posting entries for documents generated by Sage Distribution and Manufacturing Operations. They are identified by a 2-part key: the id and the chart of accounts. The chart of accounts is a reference to a setup table provided with values such as US_DEFAULT, GB_DEFAULT, FR_DEFAULT, and ZA_DEFAULT, which correspond to the charts of accounts used for the different legislations covered by Sage Distribution and Manufacturing Operations.

You can import accounts in creation and update mode.

In the following import file example, you can enter 2 child collections: attribute types and dimension types.


| !id | !chartOfAccount | isActive | *name | *isDirectEntryForbidden | isControl | taxManagement | #attributeTypes | *attributeType | *isRequired | #dimensionTypes | *dimensionType | *isRequired#1 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| string | reference | boolean | string | boolean | boolean | enum(other,includingTax,excludingTax,tax,reverseCharge) | collection | reference | boolean | collection | reference | boolean |
| id | chart of account (#setupId) | is active (true/false) | name | is direct entry forbidden (true/false) | is control (true/false) | tax management | attribute types | attribute type (#id) | is required (true/false) | dimension types | dimension type (#setupId) | is required (true/false) |
| 12100 | US_DEFAULT | TRUE | Accounts Receivable | TRUE | FALSE | other | | | | | | |
| 12400 | US_DEFAULT | TRUE | Shipped Not Invoiced Clearing | FALSE | FALSE | other | | | | | | |
| 13100 | US_DEFAULT | TRUE | Inventory | FALSE | FALSE | other | | | | | | |
| 35500000 | FR_DEFAULT | TRUE | Stock de produits finis | FALSE | FALSE | other | | | | | | |
| 40110000 | FR_DEFAULT | TRUE | Fournisseurs - Achats de biens | TRUE | TRUE | includingTax | | | | | | |

Posting classes

Posting classes are categories used to define on which accounts the posting is done for entries linked to entities such as items, customers, suppliers, and so on.

You can import posting classes in creation and modification mode.

| !id | *type | *name | isDetailed | isStockItemAllowed | isNonStockItemAllowed | isServiceItemAllowed | #line | chartOfAccount | *definition | *account |
|---|---|---|---|---|---|---|---|---|---|---|
| string | enum(item,supplier) | localized text | boolean | boolean | boolean | boolean | collection | reference | reference | reference |
| id | type | default locale: base, other locales | is detailed (true/false) | is stock item allowed | is non stock item allowed | is service item allowed | line | chart of account | definition (#legislation\|postingClassType\|id) | account (#id\|chartOfAccount) |
| COMPANY_DEFAULT | customer | Default | FALSE | | | | 1 | GB_DEFAULT | GB\|company\|DebtorRoundingVariance | 70500\|GB_DEFAULT |
| COMPANY_DEFAULT | customer | Default | FALSE | | | | 2 | GB_DEFAULT | GB\|company\|CreditorRoundingVariance | 70500\|GB_DEFAULT |
| COMPANY_DEFAULT | customer | Default | FALSE | | | | 3 | FR_DEFAULT | GB\|company\|DebtorRoundingVariance | 66600000\|GB_DEFAULT |
| COMPANY_DEFAULT | customer | Default | FALSE | | | | 4 | FR_DEFAULT | GB\|company\|CreditorRoundingVariance | 76600000\|GB_DEFAULT |
| COMPANY_DEFAULT | customer | Default | FALSE | | | | 5 | ZA_DEFAULT | GB\|company\|DebtorRoundingVariance | 70500\|GB_DEFAULT |
| COMPANY_DEFAULT | customer | Default | FALSE | | | | 6 | ZA_DEFAULT | GB\|company\|CreditorRoundingVariance | 70500\|GB_DEFAULT |
| CUSTOMER_DEFAULT | customer | Default | FALSE | | | | 1 | GB_DEFAULT | GB\|company\|AR | 12100\|GB_DEFAULT |
| CUSTOMER_DEFAULT | customer | Default | FALSE | | | | 2 | US_DEFAULT | US\|company\|AR | 12100\|GB_DEFAULT |
| CUSTOMER_DEFAULT | customer | Default | FALSE | | | | 3 | FR_DEFAULT | FR\|company\|ARGsni | 41110000\|GB_DEFAULT |
| CUSTOMER_DEFAULT | customer | Default | FALSE | | | | 4 | ZA_DEFAULT | za\|company\|ar | 44751110\|GB_DEFAULT |
| CUSTOMER_DOMESTIC | customer | customer | TRUE | | | | 1 | FR_DEFAULT | FR\|tax\|Vat | 44751210\|FR_DEFAULT |
| FR_TVA_NORMAL_COLLECTED_ON_DEBITS | tax | reduced rate collected on debit | TRUE | | | | 1 | FR_DEFAULT | FR\|tax\|Vat | 44566230\|FR_DEFAULT |
| FR_TVA_NORMAL_COLLECTED_ON_PAYEMENT | tax | reduced rate collected on fixed assets | TRUE | | | | 1 | FR_DEFAULT | FR\|tax\|Vat | 44571130\|FR_DEFAULT |
| FR_TVA_NORMAL_DEDUCTIBLE_INTRASTAT | tax | Reduced rate deductible on intrastat | TRUE | | | | 1 | FR_DEFAULT | FR\|tax\|Vat | 44571230\|FR_DEFAULT |
| FR_TVA_NORMAL_DEDUCTIBLE_ON_DEBITS | tax | Reduced rate deductible on debit | TRUE | | | | 1 | FR_DEFAULT | FR\|tax\|Vat | 44566230\|FR_DEFAULT |
| FR_TVA_NORMAL_DEDUCTIBLE_ON_PAYEMENT | tax | Reduced rate deductible on payement | TRUE | | | | 1 | FR_DEFAULT | FR\|tax\|Vat | 44571130\|FR_DEFAULT |
| FR_TVA_NORMAL_COLLECTED_ON_PAYEMENT | tax | reduced rate collected on payement | TRUE | | | | 1 | FR_DEFAULT | FR\|tax\|Vat | 44571230\|FR_DEFAULT |
| FR_TVA_NORMAL_DEDUCTIBLE_ON_DEBITS | tax | Reduced rate deductible on debit | TRUE | | | | 1 | FR_DEFAULT | FR\|tax\|Vat | 44566130\|FR_DEFAULT |
| FR_TVA_NORMAL_DEDUCTIBLE_ON_PAYEMENT | tax | Reduced rate deductible on payement | TRUE | | | | 1 | FR_DEFAULT | FR\|tax\|Vat | 44566230\|FR_DEFAULT |
| ITEM_DEFAULT | item | Default | FALSE | FALSE | FALSE | FALSE | 1 | GB_DEFAULT | GB\|item\|GoodsReceivedNotInvoiced | 44566230\|GB_DEFAULT |
| ITEM_DEFAULT | item | Default | FALSE | FALSE | FALSE | FALSE | 2 | GB_DEFAULT | GB\|item\|StockIssue | 20680\|GB_DEFAULT |
| ITEM_DEFAULT | item | Default | FALSE | FALSE | FALSE | FALSE | 3 | GB_DEFAULT | GB\|item\|CostOfGoods | 50100\|GB_DEFAULT |
| ITEM_DEFAULT | item | Default | FALSE | FALSE | FALSE | FALSE | 4 | GB_DEFAULT | GB\|item\|ShippedNotInvoiced | 12400\|GB_DEFAULT |
| ITEM_DEFAULT | item | Default | FALSE | FALSE | FALSE | FALSE | 5 | GB_DEFAULT | GB\|item\|WorkInProgress | 13700\|GB_DEFAULT |
| ITEM_DEFAULT | item | Default | FALSE | FALSE | FALSE | FALSE | 6 | GB_DEFAULT | GB\|item\|StockReceipt | 51800\|GB_DEFAULT |
| ITEM_DEFAULT | item | Default | FALSE | FALSE | FALSE | FALSE | 7 | GB_DEFAULT | GB\|item\|StockedAdjustement | 51800\|GB_DEFAULT |
| ITEM_DEFAULT | item | Default | FALSE | FALSE | FALSE | FALSE | 8 | GB_DEFAULT | GB\|item\|PayableNotInvoiced | 20680\|GB_DEFAULT |
| ITEM_DEFAULT | item | Default | FALSE | FALSE | FALSE | FALSE | 9 | US_DEFAULT | US\|item\|GoodsReceivedNotInvoiced | 20680\|US_DEFAULT |
| ITEM_DEFAULT | item | Default | FALSE | FALSE | FALSE | FALSE | 10 | US_DEFAULT | US\|item\|ShippedNotInvoiced | 50200\|US_DEFAULT |
| ITEM_DEFAULT | item | Default | FALSE | FALSE | FALSE | FALSE | 11 | US_DEFAULT | US\|item\|CostOfGoods | 50100\|US_DEFAULT |
| ITEM_DEFAULT | item | Default | FALSE | FALSE | FALSE | FALSE | 12 | US_DEFAULT | US\|item\|ShippedNotInvoiced | 12400\|US_DEFAULT |

Companies

Companies are the legal entities that group sites. Two groups of data are mandatory to create companies with an import: the company data and its addresses.

| description | *legislation | *chartOfAccount | siren | naf | rcs | legalForm | *country | *currency | sequenceN |
|---|---|---|---|---|---|---|---|---|---|
| string | reference | reference | string | string | string | enum(SARL) | reference | reference | string |
| description | legislation | chart of account | siren | naf | rcs | legal form | country | currency | sequenceN |
| British Company | GB | GB_DEFAULT | | | | | GB | GBP | GB |
| American Company | US | US_DEFAULT | | | | | US | USD | US |
| French Company | FR | FR_DEFAULT | 123456789 | 12.34Z | RCS Lyon | SA | FR | EUR | FR |
| South African Company | ZA | ZA_DEFAULT | | | | | ZA | ZAR | ZA |

The addresses are imported as a sub-level of each company. In this example, the American company has 2 addresses:

| #addresses | *isActive#1 | *name#1 | addressLine1 | addressLine2 | city | region | postcode | country | *isPrimary |
|---|---|---|---|---|---|---|---|---|---|
| collection | boolean | string | string | string | string | string | string | reference | boolean |
| addresses | is active (true/false) | name | address line 1 | address line 2 | city | region | postcode | country | is primary (true/false) |
| 1 | TRUE | C1-HEADQUARTER | 3, Scary street | | SOUTHAMPTON | | | GB | TRUE |
| 1 | TRUE | MAIN PLANT | 4, Washington avenue | | SEATTLE | | | US | TRUE |
| 2 | TRUE | C3-HEADQUARTER | 10, Jefferson Road | | MOOREHEAD | | | US | FALSE |
| 1 | TRUE | C4-HEADQUARTER | Rue des Fleurs | | GIVERNY | | | FR | FALSE |
| 1 | TRUE | C5-HEADQUARTER | 5, Mandela Square | | CAPE TOWN | | | ZA | TRUE |

Since version 42, contacts must be imported separately, with a template based on the CompanyContact node.

| !company | *address | *isActivated#1 | *title | *firstName | *lastName | role | *isPrimary1 | ||

| reference | reference | boolean | enum(ms,mr,dr,mrs) | string | string | enum(mainContact,commercialContact,financialContact) | string | boolean | IGNORE |

| company | address(#company|_sortValue) | is active(false/true) | title | first name | last name | role | is primary(false/true) | IGNORE | |

| C-UK | C-UK|10 | TRUE | ms | Jannie | Smith | mainContact | [email protected] | TRUE | |

| C-US | C-US|10 | TRUE | mr | John | DoDoe | mainContact | [email protected] | TRUE | |

| C-US | C-US|10 | TRUE | mr | John | Done | commercialContact | [email protected] | FALSE | |

| C-US | C-US|10 | TRUE | mr | John | Deere | mainContact | [email protected] | TRUE | |

| C-FR | C-FR|10 | TRUE | mr | Jerome | Crubellier | mainContact | [email protected] | TRUE | |

| C-ZA | C-ZA|10 | TRUE | mr | Nelson | Van Der Pool | mainContact | [email protected] | TRUE |

A contact references a company address through a two-part key: the company code and a numeric sort value (_sortValue). During a company import, addresses are created with the sequence 10, 20, 30, and so on. If addresses are created or imported separately, the rules are more complex. Exporting the data shows which _sortValue values are used.

After importing the 4 companies and their addresses, you can export these 4 companies from the company main list:

| !id | #addresses | !_sortValue | name | addressLine1 | addressLine2 | city | locationPhoneNumber | isPrimary |
|---|---|---|---|---|---|---|---|---|
| C-FR | | 10 | C4-HEADQUARTER | Rue des Fleurs | | GIVERNY | | TRUE |
| C-UK | | 10 | C1-HEADQUARTER | 3 Scary Street | | SOUTHAMPTON | | TRUE |
| C-US | | 10 | C3-HEADQUARTER | 4 Washington avenue | | SEATTLE | | TRUE |
| C-US | | 20 | MAIN PLANT | 10 Jefferson road | | MOOREHEAD | | FALSE |
| C-ZA | | 10 | C5-HEADQUARTER | 5 Mandela Square | | CAPE TOWN | | TRUE |

The first address created for every company has a _sortValue of 10, and the second address a _sortValue of 20.

Item categories

Items can have categories such as Food or Chemical. Item categories are imported with a single group of data.

| !id | *name(en) | *name(fr) | type |
| --- | --- | --- | --- |
| string | localized text | localized text | enum(allergen,ghsClassification,none) |
| id | default locale: en-US | default locale: fr-FR | type |
| FOOD | Food | Nourriture | allergen |
| CHEMICAL | Chemical | Produits chimiques | ghsClassification |
| MATERIAL | Material | Matériel | none |
| SERVICES | Services | Services | none |
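Because the type column only accepts the listed enum values, checking them before the import avoids rejected lines. This Python sketch writes item category rows with the standard csv module and raises an error on an unknown type. It assumes a comma delimiter and writes only the header row, not the type and description rows shown in the template; compare the result with a template exported from your tenant before relying on it. The function name is hypothetical.

```python
import csv
import io
from typing import List, Tuple

# Allowed values of the type column, as listed in the template's type row.
CATEGORY_TYPES = {"allergen", "ghsClassification", "none"}
HEADER = ["!id", "*name(en)", "*name(fr)", "type"]


def category_csv(rows: List[Tuple[str, str, str, str]], delimiter: str = ",") -> str:
    """Build item category CSV text, rejecting unknown type values before import."""
    out = io.StringIO()
    writer = csv.writer(out, delimiter=delimiter, lineterminator="\n")
    writer.writerow(HEADER)
    for cat_id, name_en, name_fr, cat_type in rows:
        if cat_type not in CATEGORY_TYPES:
            raise ValueError(f"{cat_id}: unknown type {cat_type!r}")
        writer.writerow([cat_id, name_en, name_fr, cat_type])
    return out.getvalue()


text = category_csv([
    ("FOOD", "Food", "Nourriture", "allergen"),
    ("CHEMICAL", "Chemical", "Produits chimiques", "ghsClassification"),
])
print(text)
```

The enum check is case-sensitive on purpose, since the template lists ghsClassification in camel case.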

Items

You can import items by creating a template associated with the Item entity. This template imports the following entities from a single file:

- Item contains the main information related to the item: name, description, type (good, service, food, or chemical), type of management (sold, manufactured, or purchased), default units, traceability information, default sales and purchase information, and accounting information.
- ItemAllergen contains the list of allergens associated with food products.
- ItemClassification contains the list of chemical classifications associated with chemical products.
- ItemSite contains item information per site, such as default quantities, reordering policies, and the valuation method.
- Valuation is a sub-level of detail per item and site. You cannot enter data on it even if it is present in the template.

| !id | *name | description | *status | *type | *stockUnit | category | *isStockManaged | currency | basePrice | #allergens | *allergen(setupId) | #classifications | *classification(setupId) | #itemSites | *site(Id) | prodLeadTime | safetyStock | replenishmentMethod | valuationMethod |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| APPLE_PIE | Apple pie | Home made apple pie | active | good | EACH | FOOD | TRUE | EUR | 12.3 | 1 | EGG | | | | | | | | |
| APPLE_PIE | Apple pie | Home made apple pie | active | good | EACH | FOOD | TRUE | EUR | 12.3 | 2 | GLUTEN | | | | | | | | |
| APPLE_PIE | Apple pie | Home made apple pie | active | good | EACH | FOOD | TRUE | EUR | 12.3 | 3 | MILK | | | | | | | | |
| APPLE_PIE | Apple pie | Home made apple pie | active | good | EACH | FOOD | TRUE | EUR | 12.3 | | | | | 1 | S01-FR | 2 | 10 | byReorderPoint | standardCost |
| APPLE_PIE | Apple pie | Home made apple pie | active | good | EACH | FOOD | TRUE | EUR | 12.3 | | | | | 2 | S02-UK | 3 | 10 | byMRP | standardCost |
| APPLE_PIE | Apple pie | Home made apple pie | active | good | EACH | FOOD | TRUE | EUR | 12.3 | | | | | 3 | S03-US | 5 | 100 | byMRP | averageCost |
| APPLE_PIE | Apple pie | Home made apple pie | active | good | EACH | FOOD | TRUE | EUR | 12.3 | | | | | 4 | S04-ZA | 3 | 5 | byMRP | averageCost |
| CHERRY_PIE | Cherry pie | Home made cherry pie | active | good | EACH | FOOD | TRUE | EUR | 12.3 | 1 | EGG | | | | | | | | |
| CHERRY_PIE | Cherry pie | Home made cherry pie | active | good | EACH | FOOD | TRUE | EUR | 12.3 | 2 | GLUTEN | | | | | | | | |
| CHERRY_PIE | Cherry pie | Home made cherry pie | active | good | EACH | FOOD | TRUE | EUR | 12.3 | 3 | MILK | | | | | | | | |
| CHERRY_PIE | Cherry pie | Home made cherry pie | active | good | EACH | FOOD | TRUE | EUR | 12.3 | | | | | 1 | S01-FR | 3 | 50 | byReorderPoint | standardCost |
| CHERRY_PIE | Cherry pie | Home made cherry pie | active | good | EACH | FOOD | TRUE | EUR | 12.3 | | | | | 2 | S02-UK | 2 | 40 | byMRP | standardCost |
| CHERRY_PIE | Cherry pie | Home made cherry pie | active | good | EACH | FOOD | TRUE | EUR | 12.3 | | | | | 3 | S03-US | 1 | 30 | byReorderPoint | averageCost |
| CHERRY_PIE | Cherry pie | Home made cherry pie | active | good | EACH | FOOD | TRUE | EUR | 12.3 | | | | | 4 | S04-ZA | 6 | 20 | byReorderPoint | averageCost |
| BANANA_PIE | Banana pie | Home made banana pie | active | good | EACH | FOOD | TRUE | EUR | 17.25 | 1 | EGG | | | | | | | | |
| BANANA_PIE | Banana pie | Home made banana pie | active | good | EACH | FOOD | TRUE | EUR | 17.25 | 2 | GLUTEN | | | | | | | | |
| BANANA_PIE | Banana pie | Home made banana pie | active | good | EACH | FOOD | TRUE | EUR | 17.25 | | | | | 1 | S01-FR | 7 | 50 | byReorderPoint | standardCost |
| BANANA_PIE | Banana pie | Home made banana pie | active | good | EACH | FOOD | TRUE | EUR | 17.25 | | | | | 2 | S03-US | 1 | 30 | byReorderPoint | averageCost |
| BANANA_PIE | Banana pie | Home made banana pie | active | good | EACH | FOOD | TRUE | EUR | 17.25 | | | | | 3 | S02-ZA | 6 | 20 | byMRP | averageCost |
| EGGS | Eggs | Eggs | active | good | EACH | FOOD | TRUE | EUR | 0.25 | 1 | EGG | | | | | | | | |
| EGGS | Eggs | Eggs | active | good | EACH | FOOD | TRUE | EUR | 0.25 | | | | | 1 | S01-FR | 7 | 50 | byReorderPoint | standardCost |
| EGGS | Eggs | Eggs | active | good | EACH | FOOD | TRUE | EUR | 0.25 | | | | | 2 | S03-US | 1 | 30 | byReorderPoint | averageCost |
| EGGS | Eggs | Eggs | active | good | EACH | FOOD | TRUE | EUR | 0.25 | | | | | 3 | S02-ZA | 6 | 20 | byMRP | averageCost |
| PASTRY | Puff pastry | Pastry | active | good | EACH | FOOD | TRUE | EUR | 1.5 | 1 | EGG | | | | | | | | |
| APPLES | Apples | Sliced apples | active | good | EACH | FOOD | TRUE | EUR | 3.5 | | | | | 2 | S03-US | 1 | 30 | byReorderPoint | averageCost |
| APPLES | Apples | Sliced apples | active | good | EACH | FOOD | TRUE | EUR | 3.5 | | | | | 3 | S02-ZA | 6 | 20 | byMRP | averageCost |

Because the allergens, classifications, and itemSites groups are at the same level, each group entry must be on its own line. There is no dependency between these groups, and the item columns are repeated on every line. The groups are optional, and you can also import them separately through the ItemAllergen, ItemClassification, and ItemSite entities. This allows you to import items with only one group of data.
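The layout rule above (one line per group entry, item columns repeated, the other groups left empty) can be sketched in Python as follows. The column names come from the example table; the function item_rows and its argument shapes are hypothetical, and numbering the # columns 1, 2, 3 simply follows the example.

```python
from typing import List, Sequence

ITEM_COLS = ["!id", "*name", "description", "*status", "*type", "*stockUnit",
             "category", "*isStockManaged", "currency", "basePrice"]
ALLERGEN_COLS = ["#allergens", "*allergen(setupId)"]
CLASSIFICATION_COLS = ["#classifications", "*classification(setupId)"]
SITE_COLS = ["#itemSites", "*site(Id)", "prodLeadTime", "safetyStock",
             "replenishmentMethod", "valuationMethod"]
HEADER = ITEM_COLS + ALLERGEN_COLS + CLASSIFICATION_COLS + SITE_COLS


def item_rows(item: Sequence[str], allergens: Sequence[str] = (),
              classifications: Sequence[str] = (),
              sites: Sequence[Sequence[str]] = ()) -> List[List[str]]:
    """Return one CSV line per group entry, repeating the item columns."""
    if len(item) != len(ITEM_COLS):
        raise ValueError("item needs one value per item column")
    empty_allergen = [""] * len(ALLERGEN_COLS)
    empty_class = [""] * len(CLASSIFICATION_COLS)
    empty_site = [""] * len(SITE_COLS)
    rows: List[List[str]] = []
    for n, code in enumerate(allergens, start=1):
        rows.append(list(item) + [str(n), code] + empty_class + empty_site)
    for n, code in enumerate(classifications, start=1):
        rows.append(list(item) + empty_allergen + [str(n), code] + empty_site)
    for n, site in enumerate(sites, start=1):
        # site = [site id, prodLeadTime, safetyStock, replenishmentMethod, valuationMethod]
        rows.append(list(item) + empty_allergen + empty_class + [str(n)] + list(site))
    return rows


pie = ["BANANA_PIE", "Banana pie", "Home made banana pie", "active", "good",
       "EACH", "FOOD", "TRUE", "EUR", "17.25"]
rows = item_rows(pie, allergens=["EGG", "GLUTEN"],
                 sites=[["S01-FR", "7", "50", "byReorderPoint", "standardCost"]])
print(len(rows))  # 3
```

Writing HEADER and these rows with csv.writer would give lines shaped like the BANANA_PIE lines in the example; dropping a group from the call leaves its columns empty, which matches importing items with only one group of data.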

Some data groups must be imported either separately or together with other entities. This is the case for the ItemCustomer, ItemSupplier, ItemCustomerPrice, ItemSiteSupplier, and ItemSiteCost entities, and for ItemPrice records.

Item sites

You can import item sites separately from the item import, in both creation and update mode.

The item reference and the site reference fields are mandatory.

You cannot:

- Use the value sub-group.
- Import the stock valuation method. You can use the Item stock cost import to define costs per item.

In the following example, only the first three fields are mandatory.

| !item | !site | valuationMethod | prodLeadTime | safetyStock | batchQuantity | replenishmentMethod | reorderPoint | preferredProcess |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| APPLE_PIE | S02-FR | standardCost | 10 | 20 | 20 | byMRP | 100 | purchasing |
| CHERRY_PIE | S02-FR | averageCost | 11 | 25 | 50 | byMRP | 100 | purchasing |
| BANANA_PIE | S02-FR | fifoCost | 12 | 30 | 100 | byMRP | 100 | purchasing |
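A pre-import check for item site rows can be sketched in Python. It enforces only what the text states explicitly (the item and site references are mandatory) and flags valuation methods other than the three used in the example (standardCost, averageCost, fifoCost). The platform may accept other values and applies its own validation at import time, so treat this hypothetical function as a convenience check, not a replacement for it.

```python
from typing import Dict, List

# Valuation methods that appear in this example; the platform may accept others.
KNOWN_VALUATION_METHODS = {"standardCost", "averageCost", "fifoCost"}
NUMERIC_COLS = ("prodLeadTime", "safetyStock", "batchQuantity", "reorderPoint")


def check_item_site(row: Dict[str, str]) -> List[str]:
    """Return the problems found in one item site row (empty list if none)."""
    problems: List[str] = []
    for key in ("!item", "!site"):
        if not row.get(key, "").strip():
            problems.append(f"missing {key}")
    method = row.get("valuationMethod", "").strip()
    if method and method not in KNOWN_VALUATION_METHODS:
        problems.append(f"unexpected valuationMethod {method!r}")
    for key in NUMERIC_COLS:
        value = row.get(key, "").strip()
        if value:
            try:
                float(value)
            except ValueError:
                problems.append(f"{key} is not numeric: {value!r}")
    return problems


print(check_item_site({"!item": "BANANA_PIE", "!site": "S02-FR",
                       "valuationMethod": "fifoCost", "prodLeadTime": "12"}))  # []
```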

Item site costs

The default item cost used for stock entries is stored in the Item site cost record.

| *itemSite | *costCategory | fromDate | toDate | version | forQuantity | isCalculated | materialCost | machineCost | laborCost | toolCost | indirectCost |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |

|

reference |

reference |

date |

date |

integer |

decimal |

boolean |

decimal |

decimal |